
Sunday, November 23, 2014

Posted by

Corey Harrell

I went to my local hardware store to buy one of the latest hammers. I brought the hammer home but it was unable to build the shed in my backyard. I spoke to someone else who said something similar. They bought the hammer and it didn't build what they wanted it to do. The expectation was that the hammer should do what it needs to do automatically and if it doesn't then the hammer is broken. It doesn't live up to its expectations, it wasn't the best investment, and the hammer developers need to start over.

Every now and then there are articles about how a certain security technology is broken. The recent focus of criticism is SIEM technology. It's broken because it doesn't do everything automatically and live up to people's expectations. The expectation is that the technology can be implemented and will then automatically solve problems, similar to the expectation of a shed being built by simply buying a hammer. The hammer is not at fault, just as the security technology is not at fault. Both of them are tools and the issue is with who is wielding the tool.

The hammer is not broken. The security technology is not broken. What is broken is the person (or organization) holding the tool. They need the critical thinking and problem solving skills to make the tool do what it needs to do and meet expectations along the way.

"analysts can be trained to use a tool in a rudimentary manner, they cannot be trained in the mind-set or critical thinking skills needed to master the tool"

~ Carson Zimmerman

The issue is not with the tool. The issue is that the organization hasn't put the tool in the right people's hands, and until it does the brokenness won't be fixed.

Wednesday, November 5, 2014

Posted by

Corey Harrell

An alert fires about an end point potentially having a security issue. The end point is not in the cubicle next to you, not down the hall, and not even in the same city. It's miles away in one of your organization's remote locations. Or maybe the end point is not miles away but a few floors from you; despite its closeness, however, the system has a one terabyte hard drive in it. The alert fired and the end point needs to be triaged, but what options do you have? Do you spend the time to physically track down the end point, remove the hard drive, image the drive, and then start your analysis? How much time and resources would be spent approaching triage in this manner? How many other alerts would be overlooked while the focus is on just one? How much time and resources would be wasted if the alert was a false positive? In this post I'll demonstrate how the Tr3Secure collection script can be leveraged to triage this type of alert.

Efficiency and Speed Matters

Triaging requires a delicate balance between thoroughness and speed. Too much time spent on one alert means not enough time for the other alerts. Time is not your friend when you are either trying to physically track down a system miles away or imaging a hard drive prior to analysis. Why travel miles when milliseconds will do? Why collect terabytes/gigabytes when megabytes will do? Why spend time doing deep dive analysis until you confirm you have to? The answer to all these questions is to walk the delicate balance between thoroughness and speed: quickly collect only the data you need to confirm if the alert is a security event or false positive. The tools one selects for triage are directly related to one's ability to be thorough but fast. One crop of tools that helps you strike this balance is triage scripts.

Triage scripts automate parts of the triage process; this may include either the data collection or the analysis. The Tr3Secure collection script automates the data collection. In my previous post - Tr3Secure Collection Script Updated - I highlighted the new features I added to the script: collecting the NTFS Change Journal ($UsnJrnl) and a new menu option for collecting only NTFS artifacts. Triaging the alert I described previously can easily be done by leveraging the Tr3Secure collection script's new menu option.

To demonstrate this capability I configured a virtual machine in an extremely vulnerable state and then visited a few malicious sites to provide me with an end point to respond to.

Responding to the System

The command prompt was accessed on the target system. A drive was mapped to a network share to assist with the data collection. The network share is not only where the collected data will be stored but also where the Tr3Secure collection script resides. The command below uses the Tr3Secure collection script's NTFS artifacts only option and stores the collected data on the network share mapped to drive Y:. Disclaimer: leveraging network shares does pose a risk but it allows for the tools to be executed without being copied to the target and for the data to be stored in a remote location.

The image below shows part of the output from the command above.

The following is the data that was collected using the Tr3Secure collection script's NTFS artifacts only option:

- RecentFileCache.bcf

- Prefetch files

- Master File Table ($MFT)

- NTFS Change Journal ($UsnJrnl)

- NTFS Logfile ($Logfile)

Triaging

Collecting the data is the first activity in triaging. The next step is to actually examine the data to confirm if the alert is an actual security event or a false positive. The triage process will vary based on what the event is. Triaging a fast spreading worm differs from triaging a Trojan. Antivirus alerts differ from malicious network traffic alerts (e.g. an end point communicating with a malicious IP). The scenario demonstrated in this post is for an alert firing that requires triaging an end point. There are certain indicators to look for when triaging end points for malware as outlined below:

- Programs executing from temporary or cache folders

- Programs executing from user profiles (AppData, Roaming, Local, etc)

- Programs executing from C:\ProgramData or All Users profile

- Programs executing from C:\RECYCLER

- Programs stored as Alternate Data Streams (e.g. C:\Windows\System32:svchost.exe)

- Programs with random and unusual file names

- Windows programs located in the wrong folders (e.g. C:\Windows\svchost.exe)

- Other activity on the system around suspicious files
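Several of the path-based indicators above lend themselves to a quick automated check. The following is a minimal Python sketch and is not part of the Tr3Secure collection script; the function name `triage_flags`, the folder fragments, and the short table of expected program locations are all assumptions made for illustration. It does not attempt to judge random or unusual file names, which still need an analyst's eye.

```python
# Hypothetical helper: flag program paths that match the path-based indicators.

# Illustrative subset of Windows programs and the folder each normally lives in.
# This table is an assumption for the example, not an exhaustive reference.
EXPECTED_FOLDERS = {
    "svchost.exe": r"c:\windows\system32",
    "lsass.exe": r"c:\windows\system32",
    "explorer.exe": r"c:\windows",
}

# Lowercase folder fragments derived from the indicator list.
SUSPICIOUS_FRAGMENTS = (
    "\\temp\\",
    "\\temporary internet files\\",
    "\\appdata\\",
    "\\programdata\\",
    "\\all users\\",
    "\\recycler\\",
)


def triage_flags(path: str) -> list[str]:
    """Return the names of the indicators that a program path matches."""
    flags = []
    lowered = path.lower()

    if any(fragment in lowered for fragment in SUSPICIOUS_FRAGMENTS):
        flags.append("suspicious-folder")

    # Any colon after the drive letter's colon suggests an alternate data stream.
    if ":" in lowered[2:]:
        flags.append("alternate-data-stream")
    else:
        folder, _, name = lowered.rpartition("\\")
        expected = EXPECTED_FOLDERS.get(name)
        if expected is not None and folder != expected:
            flags.append("wrong-folder")

    return flags


if __name__ == "__main__":
    for candidate in (r"C:\Windows\svchost.exe", r"C:\RECYCLER\a.exe"):
        print(candidate, triage_flags(candidate))
```

A check like this only narrows down where to look; a flagged path is a lead to examine, not a verdict.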

In addition to looking for the above indicators, it is necessary to ask yourself a few important questions.

1. What was occurring on the system around the time the alert generated?

2. Are there any indicators in the alert to use when examining the data?

3. For any suspicious or identified malicious code, did it execute on the system?

The triage steps to use depend on the collected data. In this case, the data collected with the NTFS artifacts only option limits the examination to program execution and filesystem activity. These two examination steps are very effective for triaging end points as I previously illustrated in my post Triaging Malware Incidents.

Examining Program Execution Artifacts

The first artifact to check is the RecentFileCache.bcf to determine if any stand-alone programs executed on the end point. The file can be examined using a hex editor or Harlan's rfc.exe tool included in his WFA 4/e book materials. The command below parses this artifact:

rfc.exe C:\Data-22\WIN-556NOJB2SI8-11.04.14-19.36\preserved-files\AppCompat\RecentFileCache.bcf

The image below highlights a suspicious program. The program stands out since it executed from the lab user profile's temporary internet files folder. Right off the bat this artifact provides some useful information. The coffee.exe program executed within the past 24 hours, the program came from the Internet using the Internet Explorer web browser, and the activity is associated with the lab user profile.

The second program execution artifact collected by the NTFS artifacts only option is the prefetch files. The command below parses this artifact with Winprefetchview:

winprefetchview.exe /folder C:\Data-22\WIN-556NOJB2SI8-11.04.14-19.36\preserved-files\Prefetch

The parsed prefetch files were first sorted by process path to find any suspicious programs. Then they were sorted by last run time to identify any suspicious programs that executed recently or around the time of the alert. Nothing really suspicious jumped out in the parsed prefetch files. However, there was a svchost.exe prefetch file and this was examined since it may contain interesting file handles related to the suspicious program listed in the RecentFileCache.bcf (to see why, review my RecentFileCache.bcf post and pay close attention to where I discuss the svchost.exe process). This could be something but it could be nothing. The svchost.exe process had a file handle to the ad[2].htm file located in the temporary internet files folder as shown below:

Examining Filesystem Artifacts

The Tr3Secure collection script collects the NTFS $MFT, $Logfile, and $UsnJrnl, all of which can provide a wealth of information. These artifacts can be examined in two ways. The first is to parse them and then look at the activity around the time the alert fired. The second is to parse them and then look at the activity around the time the identified suspicious files appeared on the system. In this post I'm using the latter method since the alert is hypothetical.

The $MFT was parsed with Joakim Schicht's MFT2CSV program. The output was written in the log2timeline format so it could be turned into a timeline.

The MFT2CSV csv file was imported into Excel and a search was performed for the file "coffee.exe". It's important to look at the activity prior to this indicator and afterwards to obtain more context about the event. As illustrated below, there was very little activity - besides Internet activity - around the time the coffee.exe file was created. This may not confirm how the file got there but it does help rule out certain attack avenues such as drive-by downloads.
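The same search-with-context can be done outside of Excel. The following is a minimal Python sketch, assuming the parsed timeline is a CSV file with one header row and records already in time order. The names `load_rows` and `rows_around` are hypothetical, and the sample records in the demo are made up for illustration rather than taken from the lab system. The search looks at every field, so it does not depend on any particular column layout.

```python
import csv
import io


def load_rows(handle):
    """Read CSV records from an open text file, skipping the header row."""
    reader = csv.reader(handle)
    next(reader, None)  # discard the header
    return list(reader)


def rows_around(rows, keyword, before=5, after=5):
    """Return rows within a window around every row that mentions keyword.

    rows is a list of CSV records (lists of strings) in time order.
    """
    keyword = keyword.lower()
    keep = set()
    for index, row in enumerate(rows):
        if any(keyword in field.lower() for field in row):
            start = max(0, index - before)
            stop = min(len(rows), index + after + 1)
            keep.update(range(start, stop))
    return [rows[i] for i in sorted(keep)]


if __name__ == "__main__":
    # Made-up sample standing in for a parsed timeline file.
    sample = io.StringIO(
        "date,time,desc\n"
        "11/04/2014,19:20:01,index.dat\n"
        "11/04/2014,19:20:05,coffee.exe\n"
        "11/04/2014,19:20:09,SonicMaster.exe\n"
    )
    for row in rows_around(load_rows(sample), "coffee.exe", before=1, after=1):
        print(row)
```

With a real export, `open(path, newline="")` would replace the in-memory sample. Widening `before` and `after` gives more context at the cost of more rows to read.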

The image below shows what occurred on the system next. There is activity of Java executing and this may appear to be related to a Java exploit. However, this activity was due to the Java update program executing.

The last portion of the $MFT timeline is shown below. A file with the .tmp extension was created in the temp directory followed by the creation of a file named SonicMaster.exe in the System32 folder. SonicMaster.exe automatically becomes suspicious because it was created around the same time as coffee.exe (guilt by association). If this is related then that means the malware had administrative rights, which are required to make modifications to the System32 folder. The task manager was executed and then there was no more obvious suspicious activity in the rest of the timeline. The remaining activity was the lab user account browsing the Internet.

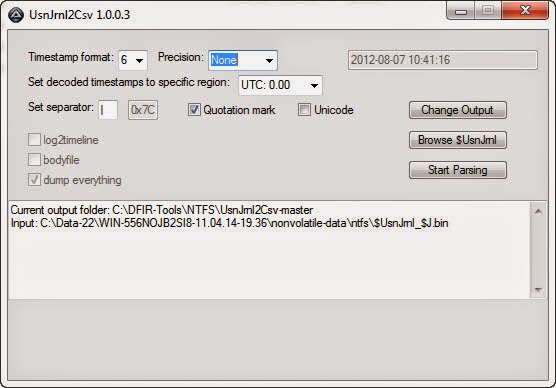

At this point the alert has been properly triaged and confirmed. The event can be escalated according to the incident response procedures. However, additional context can be obtained by parsing the NTFS Change Journal ($UsnJrnl). Collecting this artifact was the most recent update to the Tr3Secure collection script. The $UsnJrnl was parsed with Joakim Schicht's UsnJrnl2CSV program.

The UsnJrnl2CSV csv file was imported into Excel and a search was performed for the file "coffee.exe". It's important to look at the activity prior to this indicator and afterwards to obtain more context about the event. The $UsnJrnl shows the coffee.exe file being dropped onto the system from the Internet (notice the [1] included in the filename).

The next portion, shown below, shows coffee.exe executing, which resulted in the modification to the RecentFileCache.bcf. Following this the tmp file is created along with the SonicMaster.exe file. The interesting activity is the data overwrites made to the SonicMaster.exe file after it's created.

Immediately after the SonicMaster.exe file creation the data overwrites continued to other programs as illustrated below. To determine what is happening would require those executables to be examined but it appears they had data written to them.
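Spotting which executables received data overwrites can also be scripted. The following is a rough Python sketch, assuming the parsed change journal is a CSV file with a file name column and a reason column in which overwrite records contain the text DATA_OVERWRITE. The column names `FileName` and `Reason` are assumptions and should be checked against the actual header of the parsed file; the function name `overwritten_executables` and the sample records are hypothetical.

```python
import csv
import io
from collections import Counter


def overwritten_executables(handle, name_column="FileName", reason_column="Reason"):
    """Count change journal records with a data overwrite reason per .exe file.

    The default column names are assumptions; pass the real ones if they differ.
    """
    counts = Counter()
    for record in csv.DictReader(handle):
        name = (record.get(name_column) or "").lower()
        reason = (record.get(reason_column) or "").upper()
        if name.endswith(".exe") and "DATA_OVERWRITE" in reason:
            counts[name] += 1
    return counts


if __name__ == "__main__":
    # Made-up sample standing in for a parsed $UsnJrnl file.
    sample = io.StringIO(
        "FileName,Reason\n"
        "SonicMaster.exe,FILE_CREATE\n"
        "SonicMaster.exe,DATA_OVERWRITE\n"
        "example.exe,DATA_OVERWRITE+CLOSE\n"
    )
    for name, count in overwritten_executables(sample).most_common():
        print(name, count)
```

A long list of otherwise legitimate programs with overwrite records in a short time span is the kind of pattern described above, and each listed file is a candidate for closer examination.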

The executables were initially missed in the $MFT timeline since the files by themselves were not suspicious. They were legitimate programs located in their correct folders. However, the parsed $UsnJrnl provided more context about the executables and circling back around to the $MFT timeline shows the activity involving them. Their $MFT entries were updated.

Conclusion

As I mentioned previously, triaging requires a delicate balance between thoroughness and speed. It's a delicate balance between quickly collecting and analyzing data from the systems involved with an alert to determine if the alert is a security event or false positive. This post highlighted an effective technique for triaging an end point and the tools one could use. The entire technique takes minutes to complete from beginning to end. The triage process did not confirm if the activity is a security event but it did determine that additional time and resources need to be spent digging a bit deeper. Doing so would have revealed that the coffee.exe file is malicious and is actually the Win32/Parite file infector. The malware infects all executables on the local drive and network shares. Digging even deeper by performing analysis on coffee.exe (Malwr report and Anubis report) matches the activity identified on the system and even provides more indicators (such as an IP address) one could use to search for other infections in the environment.

Sunday, November 2, 2014

Posted by

Corey Harrell

It was a little over four years ago that I started a new journey. The timing wasn't the best when I took my first step into the blogging world. My family welcomed our third son into the world, I was doing challenging work for my employer, and I was still pursuing my Master's degree, attending school full time. Needless to say, starting a blog would just compete for the little spare time I had. After talking things over with my wife I took the first step by launching this blog: Journey Into Incident Response (jIIr). I've been reflecting on this journey since jIIr has surpassed 500,000 page views.

So much has changed in the past four years. I started out with my sights set on incident response and now I'm building out and managing an enterprise-wide incident response and detection program. I have been sharing my journey, my research, and thoughts on jIIr for others to read and learn from. To those who either read one post or numerous posts I wanted to say thank you. Thank you for taking the time to read what I have written. To those who went one step beyond to leave a comment or send me an email I wanted to thank you as well. Blogging is a challenging endeavor and comments are very encouraging.

jIIr started out as a way for me to share back to the digital forensic and incident response community. Shortly after jIIr's launch my motivations changed. What drove me to maintain the blog was no longer sharing back to the community. It's not necessary to share my motivations because at the end of the day what matters the most is my ability to help others by sharing what I do. Each jIIr post is an effort to help anyone who may read it, so they can take the information and apply it in their lives and/or work. To those people and organizations who have shared jIIr content either by linking to my posts, retweeting my tweets, forwarding along links, or sharing your thoughts on your own websites: thank you. Thank you for sharing; jIIr would not be reaching the audience it is today without your support. To those who continue to share jIIr content, I cannot say how grateful I am.

The past four years have been a humbling experience. God has blessed me with a passion for DFIR and the ability to communicate in the written form. For as long as I'm capable, I'll continue to do what I do so at the end of the day others who stumble upon this site may be helped in some way.
