Tuesday, December 17, 2013

Posted by

Corey Harrell

The Application Experience and Compatibility feature ensures compatibility of existing software between different versions of the Windows operating system. The implementation of this feature results in some interesting program execution artifacts that are relevant to Digital Forensic and Incident Response (DFIR). I highlighted a Windows 7 artifact in the post Revealing the RecentFileCache.bcf File and Yogesh Khatri highlighted a Windows 8 artifact in his post Amcache.hve in Windows 8 - Goldmine for malware hunters. However, there are still even more artifacts associated with this feature and the AppCompatFlags registry keys are one of them. This post provides some additional information about these registry keys in the Windows 7 and 8 operating systems and the relevance of the data stored within them.

Exploring the Program Compatibility Assistant

The Program Compatibility Assistant (PCA) is another technology the Windows operating system uses to ensure software compatibility between different Windows versions. The built-in diagnostic utilities section of Windows Internals, Part 1: Covering Windows Server 2008 R2 and Windows 7 states the following about PCA:

Program Compatibility Assistant (PCA), which enables legacy applications to execute on newer Windows versions despite compatibility problems. PCA detects application installation failures caused by a mismatch during version checks and run-time failures caused by deprecated binaries and User Account Control (UAC) settings. PCA attempts to recover from these failures by applying the appropriate compatibility setting for the application, which takes effect during the next run. In addition, PCA maintains a database of programs with known compatibility issues and informs the users about potential problems at program startup.

The Program Compatibility Assistant is a Windows service. Its service display name is Program Compatibility Assistant Service, its service name is PcaSvc, and its default description states "this service provides support for the Program Compatibility Assistant (PCA). PCA monitors programs installed and run by the user and detects known compatibility problems. If this service is stopped, PCA will not function properly." The screenshot below shows the Windows 7 PCA service's properties including the path to the executable which is "svchost.exe -k LocalSystemNetworkRestricted."

The article The Program Compatibility Assistant - Part Two goes into a little more detail about how PCA works. It mentions the following:

"When a user launches a program, if that program is on a list of programs that are known to have compatibility issues, then PCA informs the user of this. This list is maintained in the System Application Compatibility Database. Depending on the nature of the issue, the application may be Hard Blocked or Soft Blocked."

A hard block prevents the application from running or installing while a soft block indicates the program has known compatibility issues. The process creation stages were detailed in the post Revealing the RecentFileCache.bcf File; if you are not familiar with them, I recommend reading that post.

Exploring the HKU AppCompatFlags Layers Registry Keys

The Program Compatibility Assistant uses compatibility modes to help programs run on Windows. These compatibility modes are set in the following registry keys (which one is used depends on whether the setting is for all users or the current user):

HKLM\Software\Microsoft\Windows NT\CurrentVersion\AppCompatFlags\Layers

HKCU\Software\Microsoft\Windows NT\CurrentVersion\AppCompatFlags\Layers

For each compatibility setting there is a registry value listed beneath the Layers registry key. The value contains the path to the executable and the compatibility mode being applied. To illustrate how this works I'll walk through the FTK Imager installation process on a Windows 7 system. The picture below shows FTK Imager's file properties with the Windows XP (Service Pack 3) compatibility mode selected. This setting only applies to the current user; for it to apply to all users the "Change settings for all users" option must be used.

After applying the compatibility setting the registry value is updated beneath the Layers key as shown below.

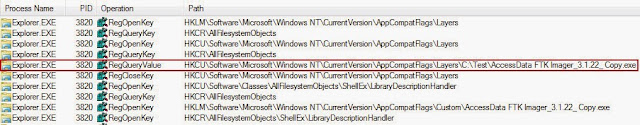
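To make the layout of a Layers value concrete, here is a minimal Python sketch that splits one value into the executable path and its compatibility mode tokens. The example path is hypothetical, the `WINXPSP3` token is my assumption for how the Windows XP (Service Pack 3) mode is stored, and the leading `~` marker that some Windows versions appear to add is likewise an assumption, not something shown in this post.

```python
def parse_layers_value(name, data):
    """Split one Layers registry value into its two parts.

    name: the value name, which holds the full path to the executable.
    data: the value data, a space-separated string of compatibility modes.
    """
    # Assumption: a standalone "~" token is only a marker, not a mode.
    layers = [token for token in data.split() if token != "~"]
    return {"path": name, "layers": layers}


# Hypothetical value mirroring the FTK Imager walkthrough above.
entry = parse_layers_value(
    r"C:\Users\anon\Desktop\AccessData FTK Imager_3.1.22.exe",
    "WINXPSP3",
)
print(entry["path"], entry["layers"])
```

The function only works on strings that have already been exported from the registry, so it can be run against output from any registry viewer or parser.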

This compatibility setting is queried during the process creation stages. The image below shows Explorer.exe starting the AccessData FTK Imager_3.1.22_Copy.exe process.

The image below shows the compatibility setting being queried during the process creation.

Exploring the Windows 7 AppCompatFlags Persisted Registry Key

The article The Program Compatibility Assistant - Part Two goes into further detail about how PCA works. Besides the Layers registry key, "PCA stores the list of all programs for which it came up under the following key for each user, even if no compatibility modes were applied (in other words, the user indicated that the program worked correctly)." In Windows 7, it appears that PCA records programs that have an installation routine in the registry hive of the user account they executed under. The registry location is:

HKCU\Software\Microsoft\Windows NT\CurrentVersion\AppCompatFlags\Compatibility Assistant\Persisted

To illustrate how this works I'll again walk through the FTK Imager installation process on a Windows 7 system. (Note: I tested various programs without installation routines but none of them were recorded in the Windows 7 Persisted registry key.) The image below shows the FTK Imager process starting.

The next image shows the Application Experience service modifying the RecentFileCache.bcf file since the AccessData FTK Imager_3.1.22.exe file was recently created.

Up to this point the process creation is very similar to what I described in the post about the RecentFileCache.bcf file. However, the next image shows the PCA process becoming more active.

The properties of the svchost.exe process confirm it is in fact the Program Compatibility Assistant service as shown below:

The PCA service performs various activities as the FTK Imager application starts to load. One of those activities is querying the registry key HKCU\Software\Microsoft\Windows NT\CurrentVersion\AppCompatFlags\Compatibility Assistant\Persisted as shown below:

Shortly thereafter the PCA service modifies the Persisted registry key as shown below.

This modification is to record the AccessData FTK Imager_3.1.22.exe file that executed on the system. The other programs listed in the Persisted registry key all had installation routines when they executed.

Exploring the Windows 8 AppCompatFlags Store Registry Key

In Windows 8, the Program Compatibility Assistant appears to function similarly to the process I described previously. However, there is one key difference. It appears that PCA records all third party programs in the registry hive of the user account they executed under, regardless of whether they have an installation routine. The Persisted registry key is no longer present and the data is stored in the location below:

HKCU\Software\Microsoft\Windows NT\CurrentVersion\AppCompatFlags\Compatibility Assistant\Store

To illustrate how this works I'll walk through the Process Explorer program executing on a Windows 8 system. Process Explorer is a standalone program so it doesn't have an installation routine. The walkthrough is very similar for programs with installation routines running on Windows 8. The image below shows Process Explorer starting.

For brevity I'm excluding other images in the process creation and only showing the activity related to PCA. The image below shows PCA accessing the application compatibility database.

PCA continues by querying the File registry key inside the Amcache.hve registry hive. In Windows 8, this registry hive replaces the RecentFileCache.bcf file. For more information about this new artifact refer to Yogesh Khatri's posts Amcache.hve in Windows 8 - Goldmine for malware hunters and Amcache.hve - Part 2.

PCA queries various locations as Process Explorer starts to load; one of them is the registry key HKCU\Software\Microsoft\Windows NT\CurrentVersion\AppCompatFlags\Compatibility Assistant\Store as shown below.

The other activity PCA performs on the Store registry key is recording information about Process Explorer as shown below.

Looking at the registry values in the Store registry key shows it contains references to various third party applications. The applications are ones without installation routines (procmon.exe, Tweb.exe, and procexp.exe) as well as ones with installation routines (FTK imager and FoxIt). The Store registry key even ties applications to Internet Explorer (Tweb.exe).

AppCompatFlags Registry Keys' Relevance

The AppCompatFlags registry keys are another artifact that shows program execution. The following are some of the relevant registry keys:

HKLM\Software\Microsoft\Windows NT\CurrentVersion\AppCompatFlags\Layers

HKCU\Software\Microsoft\Windows NT\CurrentVersion\AppCompatFlags\Layers

HKCU\Software\Microsoft\Windows NT\CurrentVersion\AppCompatFlags\Compatibility Assistant\Persisted

HKCU\Software\Microsoft\Windows NT\CurrentVersion\AppCompatFlags\Compatibility Assistant\Store

An executable listed in these keys means the following:

1. The program executed on the system.

2. The program executed on the system under the user account where the keys are located
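When working from a forensic image rather than a live system, the keys listed above have to be located inside hive files on disk. The sketch below is one minimal way to map each live key to its hive file and the key path inside that hive. The function name `offline_location` is hypothetical; the mapping itself relies on HKLM\Software being backed by the SOFTWARE hive and HKCU by the user profile's NTUSER.DAT.

```python
from typing import Tuple

RELEVANT_KEYS = [
    r"HKLM\Software\Microsoft\Windows NT\CurrentVersion\AppCompatFlags\Layers",
    r"HKCU\Software\Microsoft\Windows NT\CurrentVersion\AppCompatFlags\Layers",
    r"HKCU\Software\Microsoft\Windows NT\CurrentVersion\AppCompatFlags\Compatibility Assistant\Persisted",
    r"HKCU\Software\Microsoft\Windows NT\CurrentVersion\AppCompatFlags\Compatibility Assistant\Store",
]


def offline_location(live_key: str) -> Tuple[str, str]:
    """Return (hive file name, key path inside that hive) for a live key."""
    root, _, subkey = live_key.partition("\\")
    if root.upper() == "HKLM" and subkey.lower().startswith("software\\"):
        # The SOFTWARE hive is mounted at HKLM\Software, so drop that prefix.
        return "SOFTWARE", subkey[len("software\\"):]
    if root.upper() == "HKCU":
        # Each user's NTUSER.DAT is mounted as HKCU; the path is unchanged.
        return "NTUSER.DAT", subkey
    raise ValueError(f"no mapping for {live_key!r}")


for key in RELEVANT_KEYS:
    print(offline_location(key))
```

Because the HKCU keys live in NTUSER.DAT, each user profile on the system has to be checked separately, which is what ties the execution to a specific user account.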

Monday, December 2, 2013

Posted by

Corey Harrell

The Application Experience and Compatibility feature is considered one of the pillars in Microsoft Windows operating systems. Microsoft states in reference to the Microsoft Application Compatibility Infrastructure (Shim Infrastructure) "as the Windows operating system evolves from version to version changes to the implementation of some functions may affect applications that depend on them." This feature ensures compatibility of existing software between different versions of the Windows operating system. The implementation of this feature results in some interesting program execution artifacts that are relevant to Digital Forensic and Incident Response (DFIR). Mandiant highlighted one artifact in their Leveraging the Application Compatibility Cache in Forensic Investigations article. However, there are more artifacts associated with this feature and the RecentFileCache.bcf file is one of them. This post provides some clarification about the file and the relevance of the data stored within it.

Exploring the Application Experience and Compatibility Feature

Microsoft further states why the Application Experience and Compatibility feature is needed:

"Because of the nature of software, modifying the function again to resolve this compatibility issue could break additional applications or require Windows to remain the same regardless of the improvement that the alternative implementation could offer."

The Shim Infrastructure allows for those broken applications to be fixed and ensures they are compatible with newer versions of Windows. The Shim Infrastructure leverages API hooking to accomplish this. When there are calls to external binary files the calls get redirected to the Shim Infrastructure as illustrated below:

Alex Ionescu goes into more detail about what happens when the external binary calls get redirected to the Shim Infrastructure in his post Secrets of the Application Compatilibity Database (SDB) – Part 1. "As the binary file loads, the loader will run the Shim Engine, which will perform lookups in the system compatibility database, recovering various information." The compatibility database is named sysmain.sdb and is located in the C:\Windows\AppPatch directory. sysmain.sdb contains information "that details how the target operating system should handle the application" and covers more than 5,000 applications. Furthermore, Alex states "on top of the default database, individual, custom databases can be created, which are registered and installed through the registry."

There is very little information available about which Windows process the loader calls to perform the compatibility database checks. However, monitoring the Windows operating system with Process Monitor identified the Application Experience service as one of the Shim Infrastructure processes.

Application Experience is a Windows service. Its service display name is Application Experience, its service name is AeLookupSvc, and its default description states it "processes application compatibility cache requests for applications as they are launched." Another reference mentions "this service checks a Microsoft maintained database for known problems with popular programs and automatically enables workarounds, either at first installation (using UAC) or at application launch." The service performs these checks as applications are launched (on Windows XP this was handled by the Application Experience Lookup service). The screenshot below shows the Application Experience service's properties including the path to the executable which is "svchost.exe -k netsvcs."

In addition to the Application Experience service, there are two Windows tasks involved with the Application Experience and Compatibility feature. The two default tasks for Application Experience are named AitAgent and ProgramDataUpdater. The description for the AitAgent task states it "aggregates and uploads Application Telemetry information if opted-in to the Microsoft Customer Experience Improvement Program." The second and more important task as it relates to the RecentFileCache.bcf file is the ProgramDataUpdater. Its description states it "collects program telemetry information if opted-in to the Microsoft Customer Experience Improvement Program." The other item to note about this task is its action, which is to start the following program: %windir%\system32\rundll32.exe aepdu.dll,AePduRunUpdate. As with every Windows task there is information about when the task is scheduled to run and when it last ran. The screen below shows this information for the Application Experience tasks.

One thing to keep in mind, since it impacts the RecentFileCache.bcf file, is that the ProgramDataUpdater task is scheduled to run every day at 12:30 AM.

Exploring Process Creation on Windows

The Application Experience and Compatibility feature comes into play as a program starts. To fully explore how this feature works it is necessary to review how processes are created on Windows and what occurs when the loader runs the Shim Engine. The Windows Internals, Part 1: Covering Windows Server 2008 R2 and Windows 7 outlines the main stages of process creation:

1. Validate parameters; convert Windows subsystem flags and options to their native counterparts; parse, validate, and convert the attribute list to its native counterpart.

2. Open the image file (.exe) to be executed inside the process.

3. Create the Windows executive process object.

4. Create the initial thread (stack, context, and Windows executive thread object).

5. Perform post-creation, Windows-subsystem-specific process initialization.

6. Start execution of the initial thread (unless the CREATE_SUSPENDED flag was specified).

7. In the context of the new process and thread, complete the initialization of the address space (such as load required DLLs) and begin execution of the program.

The picture below illustrates the process creation main stages:

The loader runs the Shim Infrastructure in stage 5 of process creation, where the post-creation, Windows-subsystem-specific initialization occurs. This is where specific operations are performed to finish initializing the process. According to the Windows Internals book, one of those operations is "the application-compatibility database is queried to see whether an entry exists in either the registry or system application database for the process."

Exploring this portion of the process creation with Process Monitor reveals the files, registry keys, and processes involved with the database being queried. To demonstrate I'll follow the lead of the Windows Internals book by using the built-in Windows program notepad.exe. Notepad.exe was invoked by double clicking it; this means Explorer.exe is the process that loads notepad.exe.

Explorer.exe performs other activity as illustrated below:

The Windows Internals book states the following about this activity:

"The first is a simple check for application-compatibility flags, which will let the user-mode process creation code know if checks inside the application-compatibility database are required through the shim engine."

In addition the book goes on to say:

"you might see I/O [input/output] to one or more .sdb files, which are the application-compatibility databases on the system. This I/O is where additional checks are done to see if the shim engine needs to be invoked for this application."

Exploring the RecentFileCache.bcf File

The Application Experience and Compatibility feature is implemented through the Shim Infrastructure. This Shim Infrastructure is invoked during process creation when an application is launched. However, there was no mention of the RecentFileCache.bcf file located in the C:\Windows\AppCompat\Programs directory on Windows 7 systems. I first became aware of this file when processing malware cases. I kept seeing references (full path names to executables) to malware in the RecentFileCache.bcf file. The file was different from the Shim Cache Mandiant spoke about. The Shim Cache contains references to numerous programs over an extended period of time. The RecentFileCache.bcf file on the other hand only contained references to programs that recently executed. The reason is that the RecentFileCache.bcf file is a temporary storage location used during process creation. It appears this storage location is not used during all process creation; it's mostly used for those processes that spawned from executables which were recently copied or downloaded to the system.

Extensive testing was performed to shed light on the actions which lead to executable names being listed in the RecentFileCache.bcf file. The testing included executing the same program the following ways:

- executing the program already on the system

- renaming and then executing the program already on the system

- executing the program from removable media

- copying the program to the system and then executing it

- downloading the program with a web browser and then executing it

- copying a different program that has an installation process and then executing it

Process creation varied from what was described previously when it involved an executable that was recently created on the system (either through copying or downloading). The best way to illustrate this difference is by demonstrating it. The picture below shows part of the copying process moving E:\Tcopy.exe to C:\Tcopy.exe.

The next picture shows the part of the process creation where the application compatibility database is checked after C:\Tcopy.exe was executed from the command prompt.

Next there are checks for the application compatibility flags.

To this point the Shim Infrastructure being invoked during process creation resembles what was described previously. However, the next picture illustrates where the process creation starts to deviate. Shortly after the sysmain.sdb database is closed a svchost.exe process becomes more active.

The properties of the svchost.exe process show it is the Application Experience service since its command line is "svchost.exe -k netsvcs."

The Application Experience service performs various operations. One of its operations records the executable's full path in the RecentFileCache.bcf file as shown below:

The structure of the RecentFileCache.bcf file is fairly basic. Each entry only has the file path length and file path itself.
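A length-prefixed layout like this is straightforward to walk in code. The Python sketch below is my own illustration, not a reference parser: the 20-byte header, the 4-byte little-endian length counted in characters, the UTF-16LE encoding, and the 2-byte terminator after each path are all assumptions about the on-disk format that should be checked against a real file in a hex editor. The sample data is synthetic and built by the helper in the same block.

```python
import struct

HEADER_SIZE = 20  # assumption: size of the file header before the first entry


def parse_recentfilecache(blob, header_size=HEADER_SIZE):
    """Return the file paths stored in a RecentFileCache.bcf-style buffer."""
    paths = []
    offset = header_size
    while offset + 4 <= len(blob):
        # Assumption: the length is a 4-byte little-endian character count.
        (n_chars,) = struct.unpack_from("<I", blob, offset)
        offset += 4
        n_bytes = n_chars * 2  # assumption: UTF-16LE, two bytes per character
        if n_chars == 0 or offset + n_bytes > len(blob):
            break  # stop on an empty or truncated entry
        paths.append(blob[offset:offset + n_bytes].decode("utf-16-le", errors="replace"))
        offset += n_bytes + 2  # assumption: skip a 2-byte null terminator
    return paths


def build_sample(paths):
    """Build a synthetic buffer in the assumed layout, for demonstration only."""
    blob = bytes(HEADER_SIZE)
    for path in paths:
        blob += struct.pack("<I", len(path)) + path.encode("utf-16-le") + b"\x00\x00"
    return blob


sample = build_sample([r"c:\tcopy.exe", r"c:\windows\devcon\64bits\devconx64.exe"])
parsed = parse_recentfilecache(sample)
for path in parsed:
    print(path)
```

To try it on a real file, read the bytes with `open(path, "rb").read()` and pass them to `parse_recentfilecache`; if the output looks shifted or garbled, the header size assumption is the first thing to revisit.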

Harlan Carvey wrote a Perl script to parse the RecentFileCache.bcf file; his script makes it easier to read its contents than using a hex editor. Below is the script's output parsing a file from a test system:

c:\windows\devcon\64bits\devconx64.exe

c:\tcopy.exe

c:\users\ anon\appdata\local\microsoft\windows\temporary internet files\content.ie5\hjhf58cm\tweb.exe

Application Experience and RecentFileCache.bcf

The Application Experience service records the file names of executables in the RecentFileCache.bcf file. These executables were newly created on the system, whether they were copied, downloaded, or extracted from another executable. The storage of this information in the RecentFileCache.bcf file is only temporary since it is cleared when the Application Experience ProgramDataUpdater task runs.

Side note: I have seen references in this artifact to executables that executed from removable media (i.e. my collection tools) as well as executables that were present on the system but never executed. However, I was unable to reproduce either with my testing.

Continuing on with the demonstration, the system was monitored to see what else interacted with the RecentFileCache.bcf file. Services.exe started the Application Experience ProgramDataUpdater task as shown in the two screenshots below.

Shortly thereafter, the Application Experience service started interacting with the ProgramDataUpdater task as shown below.

Further monitoring of the ProgramDataUpdater task showed it accessed the RecentFileCache.bcf file and cleared its contents.

The ProgramDataUpdater task runs at least once a day and knowing it clears the cache means the programs listed in the RecentFileCache.bcf file executed fairly recently. The ProgramDataUpdater task also interacted with the xml file stored inside the AppCompat\Programs folder. This xml file appears to contain the program's publisher information.

RecentFileCache.bcf File's Relevance

The RecentFileCache.bcf file is another artifact that shows program execution. I have found this artifact helpful when investigating systems shortly after they became infected. The artifact is a quick way to locate malware - such as droppers and downloaders - on the system as I briefly mentioned in the post Triaging Malware Incidents. An executable listed in this artifact means the following:

1. The program is probably new to the system.

2. The program executed on the system.

3. The program executed on the system some time after the ProgramDataUpdater task last ran.
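The third point can be turned into a rough execution window. The Python sketch below assumes the examiner already knows when the ProgramDataUpdater task last ran (from the task's properties) and treats the RecentFileCache.bcf file's last modified time as the upper bound, which is my assumption rather than something tested in this post. The function name and the timestamps are hypothetical.

```python
from datetime import datetime


def execution_window(task_last_run, cache_last_modified):
    """Bound when the programs listed in the cache could have executed.

    Returns (earliest, latest), or None when the timestamps are inconsistent,
    for example when the cache was last written before the task cleared it.
    """
    if cache_last_modified < task_last_run:
        return None
    return (task_last_run, cache_last_modified)


# Hypothetical timestamps: the task ran at its 12:30 AM schedule and the
# cache file was last modified that afternoon.
window = execution_window(
    datetime(2013, 12, 2, 0, 30),
    datetime(2013, 12, 2, 14, 5),
)
print(window)
```

A window like this is only a starting point and should be corroborated with other program execution artifacts.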

*** Update ***

In the Windows 8 operating system the RecentFileCache.bcf file has been replaced by a registry hive named Amcache.hve. Yogesh Khatri digs into this new artifact and provides an excellent overview of what it contains in the post Amcache.hve in Windows 8 - Goldmine for malware hunters. The Amcache.hve hive stores a ton of information compared to the RecentFileCache.bcf file, including the executables' SHA-1 hashes.

Wednesday, November 20, 2013

Posted by

Corey Harrell

Due to changes with my employer last Spring my new responsibilities include all things involving incident response. I won’t go into details about what I’m doing for my employer but I wanted to share some linkz I came across. Similar to my responsibilities, these linkz include all things involving incident response. Enjoy ….

Incident Response Fundamentals

What better way to start out an Incident Response Linkz post than by providing a series discussing incident response fundamentals. Securosis wrote an Incident Response Fundamentals series. The topics covered include:

- Introduction, Data Collection/Monitoring Infrastructure

- Incident Command Principles

- Roles and Organizational Structure

- Response Infrastructure and Preparatory Steps

- Before the Attack

- Trigger, Escalate, and Size up

- Contain, Investigate, and Mitigate

- Mop up, Analyze, and QA

The links to all these articles can be found on the Incident Response Fundamentals: Index of Posts. Please note some of the links on the index page are broken, but I did find a quick workaround. When you hit a broken link, go to the next article in the series since its first paragraph properly links to the previous article.

Doing Incident Response Faster

Building on their fundamental series Securosis released the React Faster and Better: New Approaches for Advanced Incident Response paper. Despite being a few years old, the information is still relevant today. To illustrate the paper’s focus I’ll quote from the Introduction article in their fundamentals series:

“We need to change our definition of success from stopping an attack (which would be nice, but isn’t always practical) to reacting faster and better to attacks, and containing the damage.

We’re not saying you should give up on trying to prevent attacks – but place as much (or more) emphasis on detecting, responding to, and mitigating them.”

The React Faster and Better: New Approaches for Advanced Incident Response paper discusses how they think you can perform incident response faster and better.

Incident Response's Evolution

Anton Chuvakin tackled incident response as a research project. He wrote a paper on the subject that is only available with a Gartner subscription. However, he was frequently blogging about his research and thoughts along the way. The one thing I noticed in his research that aligns with some of the other links I'm sharing is that incident response has been evolving into a continuous process. It involves constantly monitoring to detect compromises, triaging alerts, responding to incidents, and improving detection using the discovered indicators. As Anton mentioned in his Death of a Straight Line article, it's no longer a linear process with a start and finish. It now resembles having multiple loops going on at the same time. Below are a few of his blog posts:

On Importance of Incident Response

http://blogs.gartner.com/anton-chuvakin/2013/07/15/on-importance-of-incident-response/

Incident Response: The Death of a Straight Line

http://blogs.gartner.com/anton-chuvakin/2013/06/05/incident-response-the-death-of-a-straight-line/

On Three IR Gaps

http://blogs.gartner.com/anton-chuvakin/2013/08/20/on-three-ir-gaps/

Incident Plan vs Incident Planning?

http://blogs.gartner.com/anton-chuvakin/2013/07/23/incident-plan-vs-incident-planning/

Top-shelf Incident Response vs Barely There Incident Response

http://blogs.gartner.com/anton-chuvakin/2013/08/09/top-shelf-incident-response-vs-barely-there-incident-response/

Fusion of Incident Response and Security Monitoring?

http://blogs.gartner.com/anton-chuvakin/2013/08/15/fusion-of-incident-response-and-security-monitoring/

Integrating SIEM with Incident Response

The AlienVault SIEM for ITIL-Mature Incident Response (Part 1) paper touches on how you can use a SIEM and log correlation to accomplish various things. One of them is to “develop an Incident Response process that includes a significant portion of repeatable, measurable and instructable processes.” The paper lays the groundwork (such as covering incident response implementations and it not being tech support) for the second part of the paper.

I found their second paper - SIEM for ITIL-Mature Incident Response (Part 2) - to be the more interesting of the two. The paper goes into detail about evolving incident response into a mature service model. It accomplishes this by applying the five states of capability to the incident response process. The descriptions are accompanied by diagrams to better illustrate the activities and workflow for each stage.

Practical Plans for Incident Response

The next link isn’t to a resource freely available on the Internet but an outstanding book about incident response. There is a lot of information about the incident response process as well as technical information about carrying out the process. However, there is very little information about incident response plans an organization can leverage for their internal IR process. The book The Computer Incident Response Planning Handbook: Executable Plans for Protecting Information at Risk is loaded with practical information to help build or improve your incident response plans. I plan to do a proper book review at some point but I wanted to at least mention it in this linkz edition.

Integrating Malware Analysis with Malware Response

Securosis' Malware Analysis Quant research is a very interesting project. In their words, they “designed Malware Analysis Quant to kick-start development of a refined and unbiased metrics model for confirming infection from malicious software, analyzing the malware, and then detecting and identifying proliferation within an organization.” Setting the metrics aside, the reason I really like the paper is the process it outlines. It discusses confirming an infection, analyzing the malware, and then identifying other systems (malware proliferation). When looking at all of the literature available about incident response, the one area lacking is practical information one can use to scope an incident. This paper provides some good information about the options for scoping a malware incident.

Malware Analysis Quant [Final Paper]

https://securosis.com/blog/malware-analysis-quant-final-paper

Link to the Final Paper

https://securosis.com/assets/library/reports/Securosis-MAQuant-v1.4_FINAL.pdf

Responding to Malware Infected Systems

Claus Valca over at grand stream dreams put together an outstanding post about malware response; the post is Anti-Malware Response “Go-Kit”. Claus goes into detail about the process he uses when responding to an infected system. The thing I really like about this post is he discusses the process and tools he uses. I enjoy seeing how others approach the same issue since I can learn a thing or two. To top it off the post contains a wealth of great links to articles and tools. This is one article you will want to take the time to read.

Memory Forensics to the Rescue

Rounding out this linkz post is an excellent write-up by Harlan Carvey. In his post Sniper Forensics, Memory Analysis, and Malware Detection Harlan goes into detail about a recent examination he performed. He was faced with an IDS alert and a laptop. By using a focused approach, converting a hibernation file into a raw image, and performing memory forensics he was able to solve the case. Similar to Claus' post, this is another great post highlighting how someone addressed an issue with available tools. I see so much value in sharing this kind of information because not only do I learn but I can improve my own process. You’ll definitely want to check out this write-up.

Wednesday, October 23, 2013

Posted by

Corey Harrell

In the past I briefly mentioned the Vulnerability Search but I never did a proper introduction. Well, consider this post its formal introduction. The Vulnerability Search is a custom Google search that indexes select websites related to software vulnerabilities. Unlike the Digital Forensic Search, where I’m trying to include as many DFIR sites as possible, the Vulnerability Search takes the opposite approach. The search only indexes a select few websites: those with information about exploits or vulnerabilities. I have found the Vulnerability Search to be useful so I wanted to share how I use it for incident response and information security activities.

Incident Response Triaging

The Vulnerability Search excels at triaging potential

incidents involving web applications, websites, or backend databases. Let’s say

you receive an alert indicating one of your web applications is being banged on

by some threat. The alert can be detected by anything; IDS, SIEM, or a server administrator.

When this type of alert comes in one question that needs to be answered is: did

the attack successfully compromise the server? If the server is compromised

then the alert can be elevated into a security incident. However, if the

ongoing attacks have no chance of compromising the server then there’s no need

for elevation and the resources it requires. This is where the Vulnerability

Search comes into play.

The web logs will contain the URLs being used in the

attack. If these URLs are not completely obfuscated then they can be used to

identify the vulnerability the threats are targeting. For example, let’s say

the logs are showing the URL below multiple times in the timeframe of interest:

hxxp://journeyintoir.blogspot.com/index.php?option=com_bigfileuploader&act=uploading

It might not be obvious what the URL’s purpose is or what it’s

trying to accomplish. A search using part of the URL can provide clarity about

what is happening. Searching on the string “index.php?option=com_bigfileuploader”

in the Vulnerability Search shows the vulnerability being targeted is the Joomla

Component com_bigfileuploader Arbitary File Upload Vulnerability. Now if

the website in question isn’t a Joomla server then the attack won’t be successful

and there is no need to elevate the alert.
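Pulling the search string out of a logged URL can be scripted when there are many log entries to check. The following is a minimal Python sketch, not part of the Vulnerability Search itself; the function name search_term is hypothetical and the rule of keeping only the script name plus the first query parameter is an assumption that happens to fit the example URL above.

```python
from urllib.parse import urlsplit, parse_qsl

def search_term(url):
    """Return the script name plus the first query parameter of a logged URL."""
    # "hxxp" is a common defanged form of "http"; restore it before parsing.
    if url.lower().startswith("hxxp"):
        url = "http" + url[4:]
    parts = urlsplit(url)
    # Keep only the last path segment, e.g. "index.php".
    script = parts.path.rsplit("/", 1)[-1]
    params = parse_qsl(parts.query, keep_blank_values=True)
    if not params:
        return script
    name, value = params[0]
    return f"{script}?{name}={value}"

logged = "hxxp://journeyintoir.blogspot.com/index.php?option=com_bigfileuploader&act=uploading"
term = search_term(logged)
print(term)
```

The printed string is what would be pasted into the search box.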

Incident Response Log Analysis

The Vulnerability Search also excels at investigating incidents

involving web applications, websites, or backend databases. Let’s say someone

discovered a web server compromise since it was serving up malicious links. The

post mortem analysis identified a few suspicious files on the server. The web activity

in an access log around the time the files were created on the server showed

the following:

"POST /%70%68%70%70%61%74%68/%70%68%70?%2D%64+%61%6C%

6C%6F%77%5F%75%72%6C%5F%69%6E%63%6C%75%64%65%3D%6F%

6E+%2D%64+%73%61%66%65%5F%6D%6F%64%65%3D%6F%66%66+%

2D%64+%73%75%68%6F%73%69%6E%2E%73%69%6D%75%6C%61%

74%69%6F%6E%3D%6F%6E+%2D%64+%64%69%73%61%62%6C%65%

5F%66%75%6E%63%74%69%6F%6E%73%3D%22%22+%2D%64+%6F%

70%65%6E%5F%62%61%73%65%64%69%72%3D%6E%6F%6E%65+%

2D%64+%61%75%74%6F%5F%70%72%65%70%65%6E%64%5F%66%69%

6C%65%3D%70%68%70%3A%2F%2F%69%6E%70%75%74+%2D%6E

HTTP/1.1" 200 203 "-"

A search on the above string reveals the request matches the

Plesk Apache Zeroday Remote

Exploit. If the server in question is running Plesk then you might have just

found the initial point of compromise.
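Before searching, it can help to decode the percent-encoded request so it is readable. The sketch below uses Python's standard urllib.parse.unquote on the first part of the logged request above; the variable names are only for illustration.

```python
from urllib.parse import unquote

# First portion of the logged POST request, copied from the access log entry.
encoded = (
    "/%70%68%70%70%61%74%68/%70%68%70?%2D%64+%61%6C%6C%6F%77%5F"
    "%75%72%6C%5F%69%6E%63%6C%75%64%65%3D%6F%6E"
)

# unquote() converts each %XX sequence back to its character and leaves "+" as is.
decoded = unquote(encoded)
print(decoded)
```

The decoded text shows the request is calling the PHP binary through /phppath/php and passing -d options such as allow_url_include=on, which is much easier to recognize and search on than the encoded form.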

Vulnerability Management or Penetration Testing

The Vulnerability Search is not only useful for DFIR type

work but it’s also useful for vulnerability management and penetration testing

type work. Let’s say you get a report from a vulnerability scanner and it has a

critical vulnerability listed. You can search on the CVE to get clarity

about what the vulnerability is, what the vulnerability allows for if

exploited, and what exploits are available. All of this information can help

determine the true criticality of the vulnerability and the timeframe for the

vulnerability to be patched.

Now on the other hand let’s say you are doing a pen test and

you identify a vulnerability with your tools. You can search on

the CVE to get clarity about what the vulnerability is, what the

vulnerability allows for if exploited, and what exploits are available. This

type of information can be helpful with exploiting the vulnerability in order to

elevate your privileges or access sensitive data.

The purpose of this post was to illustrate what the Vulnerability Search is and how I use it. The examples I used might have been for demonstration

purposes but they simulate scenarios I’ve encountered where the search came

in handy.

Sunday, October 6, 2013

Posted by

Corey Harrell

In this day and age budgets are shrinking, training funds

are dwindling, and the threats we face continue to increase each day. It's not

feasible to solely rely on training vendors to get your team up to speed. Not

only does it not make sense economically but for your teams to increase and

maintain their skills they need to be constantly challenged. In this edition of

linkz I'm linking to free training resources one can use to increase their own

or their team's skills.

This post may be one you want to bookmark since I'm going to

keep it up to date with any additional free online training resources I come

across.

ENISA CERT Exercises and training material

The ENISA CERT has some exercises

and training material for computer security incident response teams

(CSIRTs). The material covers a range of topics such as: triage & basic

incident handling, vulnerability handling, large scale incident handling, proactive

incident detection, and incident handling in live role playing. This material

will be of use to those wanting to do in-house training for people who are

responsible or involved with responding to and/or handling security incidents.

Open Security Training

Open Security Training.info has posted some outstanding

information security training. To demonstrate the depth of what is

available I'll only touch on the beginner courses. These include: Introductory Intel x86:

Architecture, Assembly, Applications, & Alliteration, Introduction to Network

Forensics, Introduction

to Vulnerability Assessment, Offensive, Defensive,

and Forensic Techniques for Determining Web User Identity, and Malware

Dynamic Analysis. If anyone is looking to take free security training then

Open Security Training should be your first stop.

SecurityXploded Malware Analysis Training

The SecurityXploded website also offers free malware

analysis training. The current offerings are Reverse

Engineering & Malware Analysis Training and Advanced

Malware Analysis Training. For anyone wanting to explore malware analysis

then one of these courses may be helpful.

DHS/FEMA Online Security Training

The next resource provides various security courses by the DHS/FEMA Certified Online Training over at the TEEX Domestic Preparedness Campus. The courses offered on this

site aren't as technical as the other resources I'm linking to. However, the

content shouldn't be overlooked with topics such as: Cyber Incident Analysis

and Response, Information Security Basics, Information Risk Management, and

Secure Software. These courses are not only useful for people who are on a

security team but I can see these being beneficial for anyone wanting to know

more about security.

College Courses on Coursera

"Coursera is an

education company that partners with the top universities and organizations in

the world to offer courses online for anyone to take, for free." The

courses available are on a range of subjects; just like the offerings at your

local universities. As it relates to InfoSec and IT, there are courses in

Computer Science, Information Technology, and security related topics.

Microsoft Virtual Academy

The next resource will definitely be useful for anyone

wanting to learn more about Microsoft's technology. The "Microsoft

Virtual Academy (MVA) offers online Microsoft training delivered by experts to

help technologists continually learn, with hundreds of courses, in 11 different

languages." The available courses are on a range of technologies

including: Windows, Windows Server, Server Infrastructure, and Virtualization.

One of the more interesting courses - as it relates to incident response - is

the Utilizing

SysInternals Tools for IT Pros course.

PentesterLab

The next resource is on the offensive side of the security

house. "PentesterLab is an easy and great way to learn penetration

testing." "PentesterLab provides vulnerable systems that can be used

to test and understand vulnerabilities." The available exercises include

but are not limited to: Web Pentester,

Web Pentester II, From SQL Injection to Shell, and Introduction to Linux Host

Review.

Metasploit Unleashed

Continuing on with the offensive side of the security house

is Metasploit

Unleashed. Anyone looking to

learn more about Metasploit should start out with this course for a solid

foundation about the framework.

Tuesday, September 24, 2013

Posted by

Corey Harrell

Triage is the assessment of a security event to determine if

there is a security incident, its priority, and the need for escalation. As it

relates to potential malware incidents the purpose of triaging may vary. A few potential questions triaging may address are: is

malware present on the system, how did it get there, and what was it trying to

accomplish. Answering these questions should not require a deep dive

investigation tying up resources and systems. Remember, someone needs to use

the system in question to conduct business and telling them to take a 2 to 4

hour break unnecessarily will not go over well. Plus, taking too much time to

triage may result in the business side not being happy (especially if it occurs

often), the IT department wanting to just re-image the system and move on, and less time for you to look into

other security events and issues. In this post I'm demonstrating one method

to triage a system for a potential malware incident in less than 30 minutes.

The triage technique and the tools to use are something I've

discussed before. I laid out the technique in my presentation

slides Finding Malware Like Iron Man. The presentation also covered the

tools but so has my blog. The Unleashing

auto_rip post explains the RegRipper auto_rip

script and the Tr3Secure

Data Collection Script Reloaded outlines a script to collect data thus

avoiding the need for the entire hard drive. The information may not be new

(except for one new artifact) but I wanted to demonstrate how one can leverage

the technique and tools I discussed to quickly triage a system suspected of

being infected.

The Incident

As jIIr is my personal blog, I'm unwilling to share any

casework related to my employer. However, this type of sharing isn't even

needed since the demonstration can be conducted on any infected system. In this

instance I purposely infected a system using an active link I found on URLQuery. Infecting the system in this manner

is a common way systems are infected every day, which makes this simulation

worthwhile for demonstration purposes.

Responding to the System

There are numerous ways for a potential malware incident to

be detected. A few include IDS alerts, antivirus detections, employees

reporting suspicious activity, or IT discovering the incident while trying to

resolve a technical issue. Regardless of the detection mechanism, one of the

first things that has to be done is to collect data for analysis. The

data is not only limited to what is on the system since network logs can

provide a wealth of information as well. My post’s focus is on the system's

data since I find it to be the most valuable for triaging malware incidents.

Leverage the Tr3Secure Data Collection Script to collect the

data of interest from the system. The command below assigns a case number of 9-20,

collects both volatile and non-volatile data (default option), and stores the

collected data to the drive letter F (this can be a removable drive or a mapped

drive).

tr3-collect.bat 9-20 F

The second script to leverage is the TR3Secure Data

Collection Script for a User Account to collect data from the user profile of

interest. Most of the time it's fairly easy to identify the user profile of

interest. The detection mechanism may indicate a user (e.g. antivirus logs),

the person who uses the system may have reported the issue, the IT folks may

know, and lastly whoever is assigned the computer probably contributed to the

malware infection. The command below collects the data from the administrator

and stores it in the folder with the other collected data.

tr3-collect-user.bat F:\Data-9-20\WIN-556NOJB2SI8-09.20.13-11.40 administrator

The benefit to running the above collection scripts over

taking the entire hard drive is twofold. First, collection scripts are faster

than removing the hard drive and possibly imaging it. Second, it limits the

impact on the person who uses the system in question until there is a

confirmation about the malware incident.

Triaging the System

For those who haven't read my presentation

slides Finding Malware Like Iron Man I highly recommend you do so to fully

understand the triage technique and what to look for. As a reminder the triage

technique involves the following analysis steps:

- Examine the Programs Ran on the System

- Examine the Auto-start Locations

- Examine File System Artifacts

When completing those steps there are a few things to look

for to identify artifacts associated with a malware infection. These aren't

IOCs but artifacts that occur due to either the malware characteristics or

malware running in the Windows environment. Below are the malware indicators to

look for as the analysis steps are performed against the data collected from

the system.

- Programs executing from temporary or cache folders

- Programs executing from user profiles (AppData, Roaming,

Local, etc)

- Programs executing from C:\ProgramData or All Users

profile

- Programs executing from C:\RECYCLER

- Programs stored as Alternate Data Streams (e.g.

C:\Windows\System32:svchost.exe)

- Programs with random and unusual file names

- Windows programs located in wrong folders (e.g.

C:\Windows\svchost.exe)

- Other activity on the system around suspicious files
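Some of the indicators above can be checked automatically against a list of executable paths pulled from the program execution artifacts. The following is a rough Python sketch under stated assumptions: the folder fragments, the single-entry EXPECTED_DIRS table, and the function name indicators are all illustrative choices, not part of any of the tools mentioned in this post. It does not replace reviewing the artifacts by eye.

```python
import ntpath  # parses Windows-style paths regardless of the analysis OS

# Illustrative folder fragments only; tune these for your environment.
SUSPICIOUS_FRAGMENTS = (
    "\\temp\\",
    "\\temporary internet files\\",
    "\\appdata\\",
    "\\programdata\\",
    "\\recycler\\",
)

# Tiny sample of Windows program names and the folder they normally live in.
EXPECTED_DIRS = {"svchost.exe": "c:\\windows\\system32"}

def indicators(path):
    """Return a list of reasons why a program path looks suspicious."""
    lowered = path.lower()
    hits = [f"path contains {frag}" for frag in SUSPICIOUS_FRAGMENTS if frag in lowered]
    # A colon after the drive letter's colon suggests an alternate data stream.
    if ":" in lowered[2:]:
        hits.append("possible alternate data stream")
    folder, name = ntpath.split(lowered)
    expected = EXPECTED_DIRS.get(name)
    if expected and folder != expected:
        hits.append("Windows program outside its usual folder")
    return hits

for p in (r"C:\Windows\svchost.exe", r"C:\Windows\System32:svchost.exe"):
    print(p, indicators(p))
```

Random or unusual file names and the activity around suspicious files are left out on purpose since those still need an analyst's judgment.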

Examine the Programs Ran on the System

The best way to identify unknown malware on a system is by

examining the program execution artifacts. For more information about these

artifacts refer to my slide deck, Harlan's HowTo:

Determine Program Execution post, and Mandiant's Did It Execute? post. To

parse most of the program execution artifacts run Nirsoft's WinPrefetchView

against the collected prefetch files and auto_rip along

with RegRipper against the collected registry hives. Note: the analysis should

be performed on another system and not the system being analyzed. Below is the

command for WinPrefetchView:

winprefetchview.exe /folder H:\Data-9-20\WIN-556NOJB2SI8-09.20.13-11.40\preserved-files\Prefetch

Below is the command for auto_rip to parse the program

execution and auto-start artifacts:

auto_rip.exe -s H:\Data-9-20\WIN-556NOJB2SI8-09.20.13-11.40\nonvolatile-data\registry -n H:\Data-9-20\WIN-556NOJB2SI8-09.20.13-11.40\nonvolatile-data\registry\lab -u H:\Data-9-20\WIN-556NOJB2SI8-09.20.13-11.40\nonvolatile-data\registry\lab -c execution,autoruns

Reviewing the parsed prefetch files revealed a few

interesting items. As shown below there was one executable named 5UAW[1].EXE

executing from the temporary Internet files folder and another executable named

E42MZ.EXE executing from the temp folder.

Looking at the loaded modules for the 5UAW[1].EXE prefetch file

showed a reference to the E42MZ.EXE executable; thus tying these two programs

together.

Looking at the loaded modules for the E42MZ.EXE prefetch

file showed references to other files including ones named _DRA.DLL, _DRA.TLB,

and E42MZ.DAT.

These identified

items in the prefetch files are highly suspicious and likely malware. Before

moving on to other program execution artifacts the prefetch files were sorted by the last modified time in order to

show the system activity around the time 09/20/2013 15:34:46. As shown below

nothing else of interest turned up.

The parsed program execution artifacts from the registry are

stored in the 06_program_execution_information.txt report produced by auto_rip.

Reviewing the report identified the same programs (E42MZ.EXE and 5UAW[1].EXE)

in the Shim Cache as shown below.

C:\Users\lab\AppData\Local\Microsoft\Windows\Temporary Internet Files\Content.IE5\I87XK24W\5uAw[1].exe

ModTime: Fri Sep 20 15:34:46 2013 Z

Executed

C:\Users\lab\AppData\Local\Temp\7zS1422.tmp\e42Mz.exe

ModTime: Fri Sep 20 15:34:37 2013 Z

Executed

So far the program execution artifacts revealed a great deal

of information about the possible malware infection. However, there are still

more program execution artifacts on a Windows system that are rarely discussed publicly. One of these artifacts I have been using for some time and

there is nothing about this file on the Internet (not counting the few people

who mention it related to malware infections). The artifact I'm talking about

is the C:\Windows\AppCompat\Programs\RecentFileCache.bcf file on Windows 7 systems.

I'm still working on trying to better understand what this file does, how it

gets populated, and the data it stores. However, the file path indicates it's

for the Windows application compatibility feature and its contents reflect

executables that were on the system. The majority of the time the executables I

find in this artifact were ones that executed on the system. The Tr3Secure Data

Collection Script preserves this file and viewing the file with a hex editor

shows a reference to the 5uAw[1].exe file.
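A hex editor works, but the readable paths can also be pulled out with a few lines of script. The sketch below is a generic strings-style extraction in Python and makes no claims about the file's internal structure. It assumes the paths are stored as UTF-16LE text, which is an assumption on my part, and the sample bytes are made up for the demonstration rather than taken from a real RecentFileCache.bcf file.

```python
import re

def utf16le_strings(data, min_chars=6):
    """Return runs of printable ASCII characters stored as UTF-16LE."""
    # Each character is one printable byte followed by a NUL byte.
    pattern = re.compile(rb"(?:[\x20-\x7e]\x00){%d,}" % min_chars)
    return [m.group().decode("utf-16-le") for m in pattern.finditer(data)]

# Made-up bytes standing in for a file's contents (not real file data).
sample = b"\x00\x01" + "c:\\users\\lab\\5uaw[1].exe".encode("utf-16-le") + b"\xff\xff"
found = utf16le_strings(sample)
print(found)
```

Against a preserved copy of the file, the same function would be called on the bytes returned by open(path, "rb").read().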

Examine the Auto-start Locations

The first analysis step of looking at the program execution

artifacts provided a good indication the system is infected and some leads

about the malware involved. Specifically, the step identified the following

items:

- C:\USERS\LAB\APPDATA\LOCAL\MICROSOFT\WINDOWS\TEMPORARY

INTERNET FILES\CONTENT.IE5\I87XK24W\5UAW[1].EXE

- C:\USERS\LAB\APPDATA\LOCAL\TEMP\7ZS1422.TMP\E42MZ.EXE

- C:\USERS\LAB\APPDATA\LOCAL\TEMP\7ZS1422.TMP\_DRA.DLL

- C:\USERS\LAB\APPDATA\LOCAL\TEMP\7ZS1422.TMP\_DRA.TLB

- C:\USERS\LAB\APPDATA\LOCAL\TEMP\7ZS1422.TMP\E42MZ.DAT

The next analysis step to perform in the triage process is

to examine the auto-start locations. When performing this step one should not

only look for the malware indicators mentioned previously but they should also

look for the items found in the program execution artifacts and the activity on

the system around those items. To parse most of the auto-start locations in the

registry run auto_rip

against the collected registry hives. The previous auto_rip command parsed both

the program execution and auto-start locations at the same time. The parsed

auto-start locations from the registry are stored in the 07_autoruns_information.txt

report produced by auto_rip. Reviewing the report identified the following

beneath the browser helper objects registry key:

bho

Microsoft\Windows\CurrentVersion\Explorer\Browser Helper Objects

LastWrite Time Fri Sep 20 15:34:46 2013 (UTC)

{BE3CF0E3-9E38-32B7-DD12-33A8B5D9B67A}

Class => savEnshare

Module => C:\ProgramData\savEnshare\_dRA.dll

LastWrite => Fri Sep 20 15:34:46 2013

This item stood out for two reasons. First, the key's last

write time is around the same time when the programs of interest (E42MZ.EXE and

5UAW[1].EXE) executed on the system. The second reason was because the file

name _dRA.dll was the exact same as the DLL referenced in the E42MZ.EXE's

prefetch file (C:\USERS\LAB\APPDATA\LOCAL\TEMP\7ZS1422.TMP\_DRA.DLL).
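Matching file names between the auto-start report and the program execution leads is easy to do by eye with a handful of entries, but it can be scripted for longer lists. The following Python sketch compares file names case-insensitively and ignores the folder; the function name matching_leads is hypothetical and the two lists simply reuse paths already shown in this post.

```python
import ntpath  # parses Windows-style paths regardless of the analysis OS

def matching_leads(autostart_paths, lead_paths):
    """Return (autostart, lead) pairs that share a file name, ignoring case and folder."""
    leads_by_name = {ntpath.basename(p).lower(): p for p in lead_paths}
    pairs = []
    for path in autostart_paths:
        lead = leads_by_name.get(ntpath.basename(path).lower())
        if lead is not None:
            pairs.append((path, lead))
    return pairs

autostarts = [r"C:\ProgramData\savEnshare\_dRA.dll"]
leads = [
    r"C:\USERS\LAB\APPDATA\LOCAL\TEMP\7ZS1422.TMP\E42MZ.EXE",
    r"C:\USERS\LAB\APPDATA\LOCAL\TEMP\7ZS1422.TMP\_DRA.DLL",
]
pairs = matching_leads(autostarts, leads)
print(pairs)
```

A name match is only a lead. The last write time lining up with the execution times is what makes the browser helper object stand out.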

Examine File System Artifacts

The previous analysis steps revealed a lot of information

about the potential malware infection. It flagged executables in the temp

folders, a DLL in the ProgramData folder, and identified a potential

persistence mechanism (browser helper object). The last analysis step in the

triage process uses the found leads to identify any remaining malware or files

associated with malware on the system. This step is performed by analyzing the

file system artifacts; specifically the master file table ($MFT). To parse the

$MFT there are a range of programs but for this post I'm using TZworks NTFSWalk.

Below is the command for NTFSWalk. Note: the -csvl2t switch makes the output

into a timeline.

ntfswalk.exe -mftfile H:\Data-9-20\WIN-556NOJB2SI8-09.20.13-11.40\nonvolatile-data\ntfs\$MFT -csvl2t > mft-timeline.csv

Reviewing the $MFT timeline provides a more accurate picture

about the malware infection. Importing the csv file into Excel and

searching on the keyword 5UAW[1].EXE brought me to the following portion of the

timeline.

The cool thing about the above entry is that the 5UAW[1].EXE

file is still present on the system and it was the initial malware dropped onto

the system. Working my way through the timeline to see what occurred after the 5UAW[1].EXE

file was dropped onto the system showed what was next.

Numerous files were created in the C:\ProgramData\savEnshare

folder. The file names are the exact same that were referenced in the E42MZ.EXE's

prefetch file. The last entries in the timeline that were interesting are

below.

These entries show the program execution artifacts already

identified.
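The keyword searches in this step were done in Excel, but the same search can be run with a short script when the timeline is large. The following is a minimal Python sketch that returns every csv row containing a keyword in any field. It makes no assumptions about the column layout of the NTFSWalk output, and the sample rows and their column names are made up for the demonstration.

```python
import csv
import io

def rows_matching(lines, keyword):
    """Return every csv row where any field contains the keyword (case-insensitive)."""
    needle = keyword.lower()
    return [
        row for row in csv.reader(lines)
        if any(needle in field.lower() for field in row)
    ]

# Made-up rows with hypothetical columns, standing in for mft-timeline.csv.
sample = io.StringIO(
    "date,time,description\n"
    "09/20/2013,15:34:46,C:\\ProgramData\\savEnshare\\_dRA.dll\n"
    "09/20/2013,15:34:37,C:\\Users\\lab\\AppData\\Local\\Temp\\7zS1422.tmp\\e42Mz.exe\n"
)
hits = rows_matching(sample, "E42MZ.EXE")
print(hits)
```

For the real timeline, pass an open file handle instead, for example open("mft-timeline.csv", newline="").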

Confirming the Malware Infection

The triage technique

confirmed the system in question does appear to be infected. However, the last

remaining task that had to be done was to confirm if any of the identified items

were malicious. The TR3Secure Data Collection Script for a User Account

collected a ton of data from the system in question. This data can be searched

to determine if any of the identified items are present. In this instance, the

ProgramData folder was not collected and the temp folder didn't contain the E42MZ.EXE

file. However, the collected Temporary Internet Files folder contained the 5UAW[1].EXE

file.

The VirusTotal scan against the file confirmed it was

malicious with a 16

out of 46 antivirus scanner detection rate. The quick

behavior analysis on the file using Malwr not only shows the same activity

found on the system (keep in mind Malwr ran the executable on XP while the

system in question was Windows 7) but it provided information - including

hashes - about the files dropped into the ProgramData folder.
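When uploading a sample is not an option, one alternative is to hash the collected file and search on the hash instead. The sketch below computes SHA-1 and SHA-256 with Python's standard hashlib; the function name file_hashes is hypothetical, and the demonstration hashes a small temporary file rather than the actual 5UAW[1].EXE sample.

```python
import hashlib
import os
import tempfile

def file_hashes(path, chunk_size=1024 * 1024):
    """Return SHA-1 and SHA-256 hex digests of a file, reading it in chunks."""
    sha1 = hashlib.sha1()
    sha256 = hashlib.sha256()
    with open(path, "rb") as fh:
        # Read fixed-size chunks so large files do not need to fit in memory.
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            sha1.update(chunk)
            sha256.update(chunk)
    return {"sha1": sha1.hexdigest(), "sha256": sha256.hexdigest()}

# Demonstration on a throwaway file, not on the malware sample.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"abc")
hashes = file_hashes(tmp.name)
os.remove(tmp.name)
print(hashes)
```

The resulting hashes can also be compared against the hashes a sandbox report lists for dropped files.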

Malware Incidents Triaging Conclusion

In this post I demonstrated one method to triage a system

for a potential malware incident. The entire triage process takes less than 30

minutes to complete (keep in mind the user profile collection time is dependent

on how much data is present). This is even faster than a common technique

people use to find malware (conducting antivirus scans) as I illustrated in my

post Man

Versus Antivirus Scanner. The demonstration may have used a test system but

the process, techniques, tools, and my scripts are the exact same I've used

numerous times. Each time the end result is very similar to what I

demonstrated. I'm able to answer the triage questions: is malware present on

the system, how did it get there, what's the potential risk to the

organization, and what are the next steps in the response.